Have you ever run into the "Blocked due to unauthorized request (401)" error in Google Search Console? It’s one of those moments where you’re left wondering what went wrong.

This error occurs when Googlebot tries to access certain pages on your website but is denied entry because the server asks for authentication. It’s a bit like being locked out of your own house: frustrating and inconvenient, especially when you’re trying to keep your website running smoothly. This post covers what the error means, why it happens, and how you can tackle it with practical steps.

What Does a 401 Error Mean in Google Search Console?

A 401 error in Google Search Console means that Googlebot requested a page on your site and the server answered with the HTTP status 401 (Unauthorized), which signals that the page requires authentication. Googlebot doesn’t log in or enter passwords, so it gets stuck when faced with login prompts, IP restrictions, or server configurations that demand credentials.

Here are common reasons for this error:

- Password-Protected Pages: When you’ve placed certain pages behind a login wall, Googlebot cannot access them. These could include admin panels, membership-only content, or development pages. If these pages accidentally appear in your sitemap or internal links, Googlebot will encounter this issue.

- Server Configurations: Server settings sometimes require authentication for pages that are meant to be publicly available. This often occurs due to misconfigurations during server updates or migrations.

- IP Restrictions: Websites that block specific IP addresses can unintentionally deny Googlebot access. This happens when your server or security system mistakes Googlebot’s IPs for suspicious traffic.

- Security Plugins: Security measures, such as firewalls or plugins, may incorrectly classify Googlebot as a malicious actor and restrict its requests.

- CMS Settings: Content management systems, like WordPress or Drupal, can have default settings or plugins that limit access to certain pages unintentionally.

Spotting Affected Pages

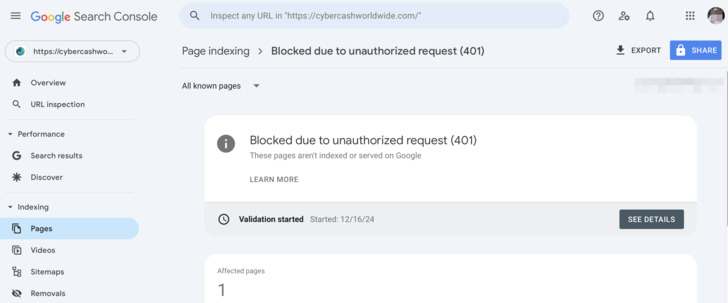

If you want to pinpoint the exact pages where the 401 error is occurring, Google Search Console makes it easy:

- Log in to Google Search Console: Access your account as usual and select the property associated with the website experiencing issues.

- Navigate to the Page Indexing Report: Click on "Pages" under the "Indexing" section to review the overall status of your site’s pages.

- Locate the Error: Look for entries labeled "Blocked due to unauthorized request (401)." These will usually appear under the "Not Indexed" section.

- View Affected URLs: Click on the error message to see a detailed list of URLs that are causing the issue. Copy these URLs to keep track of which pages need adjustments.

By identifying the problematic pages, you can focus your efforts on resolving access issues where they matter most.
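
Once you have the list of URLs, grouping them by site section can show at a glance whether the problem sits in one area (say, a members section) or is spread across the site. Here is a minimal Python sketch of that idea. The URLs and the `group_by_section` helper are made up for illustration; in practice you would paste in the URLs you copied from the report.

```python
from collections import Counter
from urllib.parse import urlparse


def group_by_section(urls):
    """Count affected URLs by their first path segment (e.g. /members)."""
    counts = Counter()
    for url in urls:
        path = urlparse(url.strip()).path
        segments = [s for s in path.split("/") if s]
        section = "/" + segments[0] if segments else "/"
        counts[section] += 1
    return counts


# Hypothetical URLs standing in for the list copied from Search Console.
affected = [
    "https://example.com/members/dashboard",
    "https://example.com/members/profile",
    "https://example.com/staging/home",
    "https://example.com/",
]

for section, count in group_by_section(affected).most_common():
    print(f"{section}: {count}")
```

A section that dominates the count is usually the best place to start looking for a stray login requirement or a leftover sitemap entry.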

Common Scenarios Causing 401 Errors

Let’s break down some real-life scenarios where a 401 error might crop up:

- Private Member Areas: If you have sections of your website reserved for logged-in users, such as dashboards or exclusive content, these pages will naturally block Googlebot. However, problems arise when these private URLs accidentally appear in your sitemap or are linked from public pages.

- Development Pages: During website development, password protection is often added to staging or testing pages. If these links are left in navigation menus or internal links, Googlebot will try to access them and get blocked.

- Security Overreach: Firewalls or security software might block Googlebot due to misconfigured settings. This is especially common when new security plugins or updates are applied without checking their compatibility with search engine crawlers.

- Server Updates: After moving to a new hosting provider or making updates to your server, default settings might restrict access unintentionally. For example, some servers block all requests that don’t explicitly pass a certain set of headers.

These scenarios show why it pays to keep track of website configurations and updates: most 401 errors trace back to a change that nobody checked against crawler access.

Fixing 401 Errors in Google Search Console

Resolving a 401 error depends on identifying the specific cause. Here are practical steps for addressing this issue:

- Review Page Permissions: Double-check the permissions for the affected pages. Remove login requirements from public-facing pages. For private pages, ensure they’re excluded from sitemaps and internal linking structures.

- Adjust Server Configurations: Use server logs to find out why Googlebot’s access is being blocked. Update your server’s rules or authentication settings to ensure public pages are accessible.

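- Whitelist Googlebot: Add Googlebot’s published IP ranges to your server’s allowlist. The user agent string on its own is easy to spoof, so pair any user-agent rule with an IP or reverse DNS check. This ensures that Googlebot isn’t mistaken for malicious traffic.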

- Inspect CMS Settings: Review your CMS’s content visibility settings and plugins. Disable or reconfigure plugins that restrict access to publicly intended content.

- Test with URL Inspection Tool: Use the URL Inspection tool in Google Search Console to view how Googlebot sees the page. Check for specific errors or restrictions and request re-indexing after resolving them.
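
For the server log step above, a small script can pull out the requests that identified themselves as Googlebot and received a 401. The sketch below assumes your server writes the common "combined" access log format used by default on Apache and nginx; if your format differs, the regular expression will need adjusting. The sample log lines are invented for illustration.

```python
import re
from collections import Counter

# Assumes the "combined" access log format; adjust for custom formats.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_401s(lines):
    """Count paths that returned 401 to requests claiming to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are in a different format
        if match["status"] == "401" and "Googlebot" in match["agent"]:
            hits[match["path"]] += 1
    return hits


# Invented sample lines; in practice, iterate over your access log file.
sample = [
    '66.249.66.1 - - [10/Jan/2025:10:00:00 +0000] "GET /blog/post HTTP/1.1" 401 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2025:10:00:05 +0000] "GET /blog/post HTTP/1.1" 200 8192 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(googlebot_401s(sample))
```

If the same path returns 200 to ordinary visitors and 401 to Googlebot, as in the sample, the cause is more likely a bot-specific rule in a firewall or plugin than a page-level login.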

Steps for Resolving Common Issues

Here are practical actions you can take:

- Remove Login Prompts from Public Pages: For instance, check if your blog or product pages are accidentally behind authentication barriers. Public content should not require a username or password.

- Reconfigure Security Plugins: Access your security plugin settings and ensure that Googlebot’s user agent isn’t blocked. If needed, add exceptions for Google’s IP ranges.

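- Check Your Robots.txt File: A robots.txt rule does not cause a 401 by itself (Search Console reports that case separately as "Blocked by robots.txt"), but the file is worth reviewing at the same time. Make sure it doesn’t block Googlebot from crawling critical sections of your site, and avoid "Disallow" directives on URLs you want indexed.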

- Adjust Meta Tags: This is a separate indexing issue from the 401, but while you review the affected pages, ensure they don’t include meta tags like "noindex" unless the content is explicitly meant to remain hidden from search engines.

- Use Debugging Tools: Tools like cURL or Postman can help simulate Googlebot’s request and identify where access is denied.
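
If you prefer a script to cURL or Postman, the same check can be sketched in Python with the standard library. The example below sends a request with a Googlebot-style user agent and reports the status code along with the `WWW-Authenticate` header, which tells you what kind of login the server is asking for. The function names are just illustrative, and one caveat applies: a request from your own machine only reproduces user-agent rules, not rules based on Googlebot’s IP addresses.

```python
import urllib.error
import urllib.request

GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)


def fetch_status(url, user_agent=GOOGLEBOT_UA, timeout=10):
    """Return (status_code, WWW-Authenticate header) for a GET request."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, response.headers.get("WWW-Authenticate")
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx; status and headers live on the exception.
        return error.code, error.headers.get("WWW-Authenticate")


def describe(status, challenge=None):
    """Turn a status code into a short, human-readable diagnosis."""
    if status == 401:
        scheme = challenge.split()[0] if challenge else "unknown"
        return f"401: authentication required (scheme: {scheme})"
    if status == 403:
        return "403: forbidden, often an IP or firewall rule rather than a login"
    if 200 <= status < 300:
        return f"{status}: reachable without credentials"
    return f"{status}: other response, investigate separately"
```

For a page of your own, `print(describe(*fetch_status("https://example.com/blog/")))` would run the check (the URL here is a placeholder). Running it twice, once with the Googlebot user agent and once with a normal browser user agent, shows whether the server treats the two differently.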

Preventing Future 401 Errors

To keep these errors from happening again, implement proactive measures:

- Schedule Regular Audits: Review your site’s access settings and permissions monthly. Check for unexpected login prompts or restricted pages.

- Update Sitemaps Regularly: Whenever new pages are added or old ones are removed, ensure your sitemap reflects these changes accurately.

- Monitor Security Settings: After every update to security plugins or firewalls, review the settings to confirm Googlebot is not restricted.

- Whitelist Search Engine Crawlers: Configure your server or CMS to explicitly allow traffic from search engine bots. This ensures smooth crawling even after updates.

- Document Changes: Keep a record of server updates, CMS changes, and plugin installations to troubleshoot future errors effectively.
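
When you allow search engine crawlers through, it helps to confirm that a visitor claiming to be Googlebot really is one. Google documents a reverse DNS check for this: look up the hostname for the IP, confirm it ends in `googlebot.com` or `google.com`, then resolve that hostname back and confirm it returns the same IP. Below is a rough Python sketch of that check. The function names are illustrative, and the forward lookup used here only handles IPv4 addresses.

```python
import socket

# Hostname suffixes Google documents for its common crawlers.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_google_hostname(hostname):
    """True if the hostname belongs to one of Google's crawler domains."""
    return hostname.rstrip(".").lower().endswith(GOOGLE_SUFFIXES)


def verify_googlebot(ip, reverse=socket.gethostbyaddr,
                     forward=socket.gethostbyname_ex):
    """Reverse DNS lookup followed by a forward lookup to confirm the IP.

    The resolver functions are parameters so they can be swapped out,
    for example in tests. gethostbyname_ex resolves IPv4 only.
    """
    try:
        hostname = reverse(ip)[0]
        if not is_google_hostname(hostname):
            return False
        return ip in forward(hostname)[2]
    except OSError:
        # Covers failed lookups (socket.herror and socket.gaierror).
        return False
```

You could run `verify_googlebot` against IPs pulled from your server logs before adding an exception, so that a spoofed user agent does not earn a place on your allowlist.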

Tasks for Long-Term Maintenance

Here are tasks to incorporate into your maintenance routine:

- Monthly Permission Reviews: Ensure that public pages are not unintentionally password-protected. Check key sections of your site, such as blogs, product pages, or landing pages.

- Quarterly Sitemap Updates: Audit your XML sitemap quarterly to verify that it includes all necessary public pages and excludes restricted ones.

- Annual Server Configuration Review: Check server rules and access control settings annually to ensure they align with your website’s structure and content.

- Debug URL Access on Demand: Use tools to simulate bot behavior whenever new errors appear in Search Console. Identify the cause promptly and resolve it.
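
The sitemap audit is a good candidate for a small script. The sketch below parses a standard XML sitemap and flags any URL that falls under a path you know is restricted. The prefixes in `PRIVATE_PREFIXES` and the sample sitemap are placeholders, so swap in the private sections of your own site.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical private sections; replace with your own.
PRIVATE_PREFIXES = ("/members/", "/admin/", "/staging/")


def restricted_urls_in_sitemap(xml_text, private_prefixes=PRIVATE_PREFIXES):
    """Return sitemap URLs whose path starts with a restricted prefix."""
    root = ET.fromstring(xml_text)
    flagged = []
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        if urlparse(url).path.startswith(private_prefixes):
            flagged.append(url)
    return flagged


# Invented sitemap content for illustration.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/blog/first-post</loc></url>
  <url><loc>https://example.com/members/dashboard</loc></url>
</urlset>"""

for url in restricted_urls_in_sitemap(sample):
    print("Remove from sitemap:", url)
```

Any URL this flags is one that Googlebot will keep requesting and keep getting a 401 for, so removing it from the sitemap clears the report without changing your access rules.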

Wrapping Things Up

The 401 error in Google Search Console indicates that Googlebot is being denied access to certain pages on your site. By identifying the causes, such as password protection, server restrictions, or plugin misconfigurations, and taking proactive measures to address these issues, you can ensure that your site remains accessible to search engines.

With regular audits, clear documentation, and robust testing methods, you can minimize the occurrence of these errors and maintain your website’s search visibility.
